This is a series of articles looking at the Minisforum AI X1 Pro running Linux.

Unlike many mini PCs, the AI X1 Pro has a very capable integrated GPU. I want to see what performance is like running machine learning software on the Radeon 890M.

In this article I’m looking at Gerbil, a desktop app designed to let you run large language models locally with the minimum of fuss. The software is powered by KoboldCpp, which itself is a highly modified fork of llama.cpp.

Installation

I evaluated Gerbil using the latest AppImage release from the project’s GitHub repository. At the time of writing, that’s Gerbil 1.4.3.

Make the file executable with the command:

$ chmod u+x Gerbil-*.AppImage

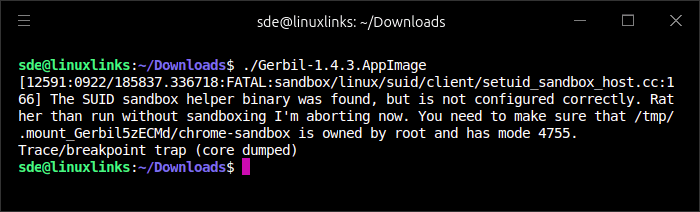

When trying to run the AppImage, you may get an error along the lines of:

This may confuse newcomers, because when you go looking for /tmp/.mount_Gerbil5zECMd/chrome-sandbox, the file isn’t there.

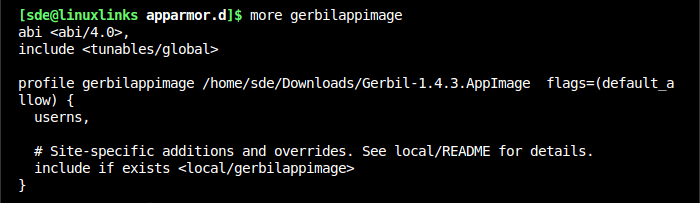
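The error typically appears on distributions that restrict unprivileged user namespaces through AppArmor, with Ubuntu 24.04 assumed here as the example. In that case the Electron sandbox cannot use namespaces and falls back to the SUID chrome-sandbox helper, which cannot be made root-owned inside a user-mounted AppImage. The sketch below reports whether that restriction is active. The function name `userns_restriction` is hypothetical, and the sysctl it reads is assumed to exist only on kernels carrying Ubuntu’s AppArmor patches.

```shell
#!/bin/sh
# Sketch: report whether AppArmor restricts unprivileged user namespaces.
# Assumption: this sysctl exists on Ubuntu 23.10 and later kernels;
# on other systems the file is simply absent.
userns_restriction() {
  f=/proc/sys/kernel/apparmor_restrict_unprivileged_userns
  if [ ! -r "$f" ]; then
    echo "not present"
  elif [ "$(cat "$f")" = "1" ]; then
    echo "restricted"
  else
    echo "unrestricted"
  fi
}

userns_restriction
```

A result of "restricted" is consistent with the chrome-sandbox error above. An AppArmor profile that grants the AppImage the `userns` permission is one way to lift the restriction for that program alone.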

My recommended way to resolve this type of issue is to create a file /etc/apparmor.d/gerbilappimage (e.g. with sudo nano /etc/apparmor.d/gerbilappimage) containing the following lines:

You’ll need to edit the profile line so that it points to where you’ve saved the AppImage.
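As a sketch of what such a profile usually looks like on an AppArmor 4 system, with Ubuntu 24.04 assumed, the helper below prints an unconfined profile that allows the AppImage to create user namespaces. It follows the pattern of the profiles Ubuntu ships for browsers. The function name `gerbil_profile`, the profile name, and the example path `$HOME/Downloads/Gerbil-1.4.3.AppImage` are hypothetical, so substitute your own path.

```shell
#!/bin/sh
# Sketch: print an AppArmor profile that gives the Gerbil AppImage its own
# unconfined profile with permission to create user namespaces ("userns").
# Assumes AppArmor 4 (abi/4.0). Names and the example path are hypothetical.
gerbil_profile() {
  appimage_path="$1"
  cat <<EOF
abi <abi/4.0>,
include <tunables/global>

profile gerbilappimage "$appimage_path" flags=(unconfined) {
  userns,

  # Site-specific additions and overrides. See local/README for details.
  include if exists <local/gerbilappimage>
}
EOF
}

# Example only: replace the path with wherever you saved the AppImage.
gerbil_profile "$HOME/Downloads/Gerbil-1.4.3.AppImage"
```

One way to write the file is to pipe the output through `sudo tee /etc/apparmor.d/gerbilappimage > /dev/null`. The helper only prints text, so nothing changes on the system until you write the file and reload AppArmor.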

Then issue the command:

$ sudo systemctl reload apparmor.service


Pages in this article:

Page 1 – Introduction

Page 2 – Running Gerbil

Complete list of articles in this series:

| Minisforum AI X1 Pro | Description |
|---|---|
| Introduction | Introduction to the series and interrogation of the machine |
| Benchmarks | Benchmarking the Minisforum AI X1 Pro |
| Power | Testing and comparing the power consumption |
| Jan | ChatGPT without privacy concerns |
| ComfyUI | Generate video, images, 3D, audio with AI |
| AMD Ryzen AI 9 HX 370 Cores | Primary (Zen 5) and Secondary Cores (Zen 5c) |
| Gerbil | Run large language models locally |
| Neural Processing Unit (NPU) | Introduction |
| Gaia | Run LLM Agents |
| Noise | Comparing the machine's noise with other mini PCs |
| Bluetooth | Fixing Bluetooth when dual-booting |
| BIOS | A tour of the Basic Input/Output System |
