Last Updated on November 22, 2025

An NPU (or Neural Processing Unit) is a dedicated hardware processor designed to accelerate neural network operations and AI tasks. It handles the complex parallel computations needed for tasks like image recognition, video processing, natural language processing, and voice commands. In most consumer products, the NPU is integrated into the main CPU, but it can also be an entirely discrete processor on the motherboard.

NPUs are designed to complement CPUs and GPUs. While CPUs handle a broad range of tasks and GPUs excel at rendering graphics as well as AI tasks, NPUs specialize in executing AI workloads with lower power consumption.

This blog looks at using the NPU of the AMD Ryzen AI 9 HX 370 in Linux. The AMD Ryzen AI 9 HX 370 is a mobile processor with 12 cores and 24 threads. It features Radeon 890M integrated graphics and a new-generation XDNA 2 NPU. AMD rates the processor at up to 80 INT8 TOPS when the CPU cores, GPU cores, and NPU are combined.

My testing of the NPU is confined to the Minisforum AI X1 Pro mini PC. This set of NPU-focused articles is really designed to help me, but hopefully it will interest others. If you’ve got specific software working under Linux using the NPU, I’m very interested in hearing your thoughts. Drop a comment below.

My objective is to summarise my experiences and act as an aide-mémoire. The articles will be frequently modified (read corrected) and updated. I include these articles in my Minisforum AI X1 Pro series even though the focus is AMD’s processor. Any plaudits and criticisms about the NPU fall squarely on the shoulders of AMD and Linux.

I’m testing the AMD CPU/GPU/NPU under Ubuntu 24.04 (and 25.04) and also Manjaro. The first task is to test whether the NPU is recognised in Linux.

The AMD XDNA-based NPU is supported via the amdxdna kernel driver, which is included in recent versions of the Linux kernel and enabled automatically in my Ubuntu and Manjaro distributions. For reference, Ubuntu needs to be at least version 22.04. The kernel needs to be v6.10 or above.

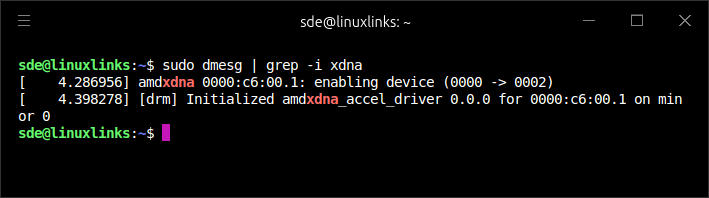

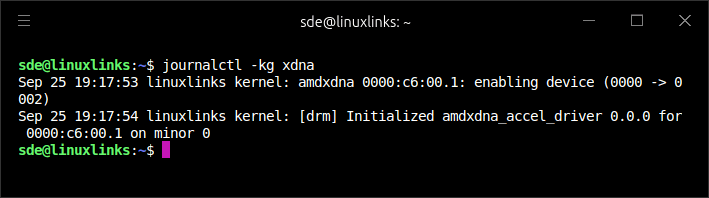
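To check the kernel requirement quickly, here’s a minimal POSIX shell sketch that compares the running kernel release (from `uname -r`) against the v6.10 minimum. It assumes GNU coreutils `sort -V` for version ordering, as found on Ubuntu and Manjaro; the helper name `kernel_at_least` is hypothetical, not part of any tool.

```shell
#!/bin/sh
# Minimal sketch (assumes GNU coreutils `sort -V`): does the running
# kernel meet the v6.10 minimum? kernel_at_least is a hypothetical helper.

# kernel_at_least RELEASE MINIMUM
# Exit status 0 if RELEASE (e.g. "6.14.0-15-generic") is at least MINIMUM (e.g. "6.10").
kernel_at_least() (
    # Keep only the leading numeric part, e.g. "6.14.0-15-generic" -> "6.14.0"
    release=$(printf '%s\n' "$1" | sed 's/^\([0-9][0-9.]*\).*/\1/')
    # Version-sort both strings; if MINIMUM sorts first, RELEASE is new enough
    lowest=$(printf '%s\n%s\n' "$2" "$release" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
)

if kernel_at_least "$(uname -r)" 6.10; then
    echo "Kernel $(uname -r) meets the v6.10 minimum"
else
    echo "Kernel $(uname -r) is older than v6.10"
fi
```

The version-sort trick avoids splitting the release string by hand: whichever string `sort -V` puts first is the older one.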

I can verify that the NPU is detected and initialized with one of the following commands:

$ sudo dmesg | grep -i xdna

$ journalctl -kg xdna

The output confirms the NPU has been detected and initialized.

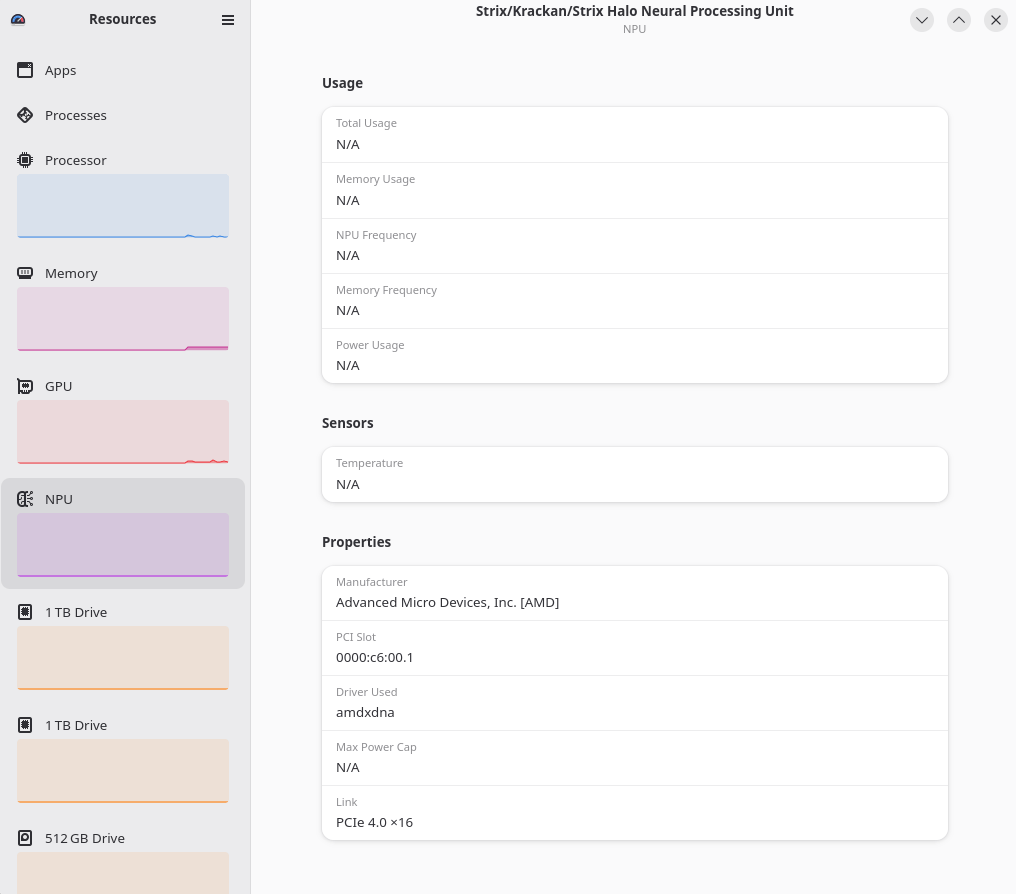

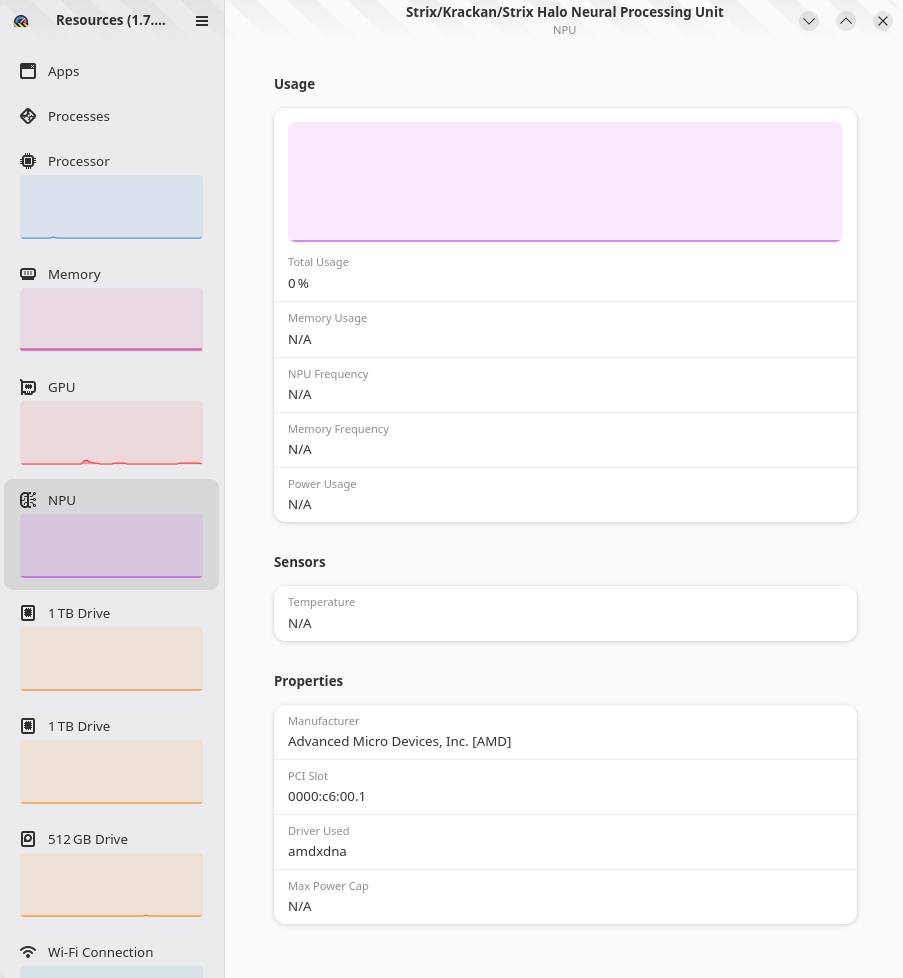
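The dmesg and journalctl checks above read the kernel log. As an alternative that doesn’t need sudo, the sketch below looks for the driver in sysfs and for a device node. It assumes a loaded amdxdna module appears under `/sys/module/amdxdna` and that the NPU is exposed as a DRM accel device under `/dev/accel/`; the helper `module_loaded` is hypothetical.

```shell
#!/bin/sh
# Minimal sketch: check for the amdxdna driver without sudo.
# Assumptions: a loaded amdxdna module appears under /sys/module/amdxdna,
# and the NPU is exposed as a DRM accel device node under /dev/accel/.

# module_loaded NAME: exit status 0 if /sys/module/NAME exists
# (loadable modules appear there while they are loaded)
module_loaded() {
    [ -d "/sys/module/$1" ]
}

if module_loaded amdxdna; then
    echo "amdxdna module: loaded"
else
    echo "amdxdna module: not found under /sys/module"
fi

# If nothing matches, the glob is left unexpanded and the -e test fails
for node in /dev/accel/accel*; do
    if [ -e "$node" ]; then
        echo "accel device node: $node"
    else
        echo "no device nodes under /dev/accel"
    fi
done
```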

I want to monitor NPU usage. I’ve been looking for a simple command-line tool that shows NPU usage (an equivalent of rocm-smi, which monitors GPU usage), but I’ve drawn a blank here. On a machine with an Intel Core Ultra 7 255H (which also has an NPU), I monitor NPU usage with the Resources utility, so that’s an obvious starting point. Resources is a useful GUI application for monitoring the utilization of system resources.

Launching Resources shows the following screen.

That’s not looking good: NPU usage just shows as n/a. A bit of digging on the project’s GitHub page reveals that the developer has a branch that includes amdxdna support, but it doesn’t appear to have been tested by users.

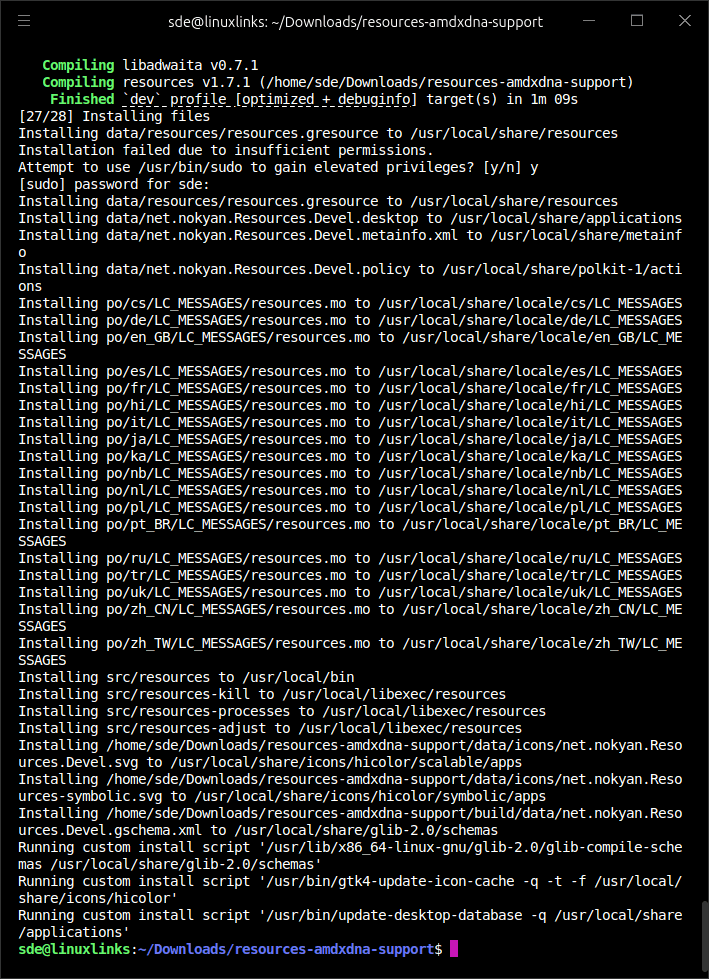

Let’s build that version. I downloaded the zip file (resources-amdxdna-support.zip) from the branch and extracted it. On a vanilla Ubuntu 25.04 system, I need to install a few dependencies.

$ sudo apt install meson rustc libglib2.0-dev libgtk-4-dev libadwaita-1-dev cargo gettext
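Before building, it can save a failed build run to confirm the build tools are on the PATH. This is a minimal sketch; the list of tools (meson, ninja, cargo, rustc) is my assumption based on the build commands in this article, and it doesn’t check the -dev library packages. The helper `missing_tools` is hypothetical.

```shell
#!/bin/sh
# Minimal sketch: confirm the command-line build tools are on PATH.
# The tool list is an assumption; library packages such as
# libgtk-4-dev are not checked here.

# missing_tools TOOL...: print each TOOL that is not found on PATH
missing_tools() (
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
)

missing=$(missing_tools meson ninja cargo rustc)
if [ -n "$missing" ]; then
    echo "Missing build tools:"
    echo "$missing"
else
    echo "All build tools found"
fi
```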

Now I build Resources with the commands:

$ cd resources-amdxdna-support

$ meson setup build --prefix=/usr/local

$ ninja -C build install

The program builds successfully, and the binary is installed in /usr/local/bin.

OK, Resources now shows an entry (0%) for total NPU usage, but this doesn’t necessarily mean that it’s monitoring NPU usage correctly.

TO DO

- Find the simplest way to monitor NPU usage in Linux.

- Determine whether Resources is reporting NPU usage correctly. If it is, let the developer know via GitHub.

- Start testing software that uses the NPU.
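For the first item, a crude command-line starting point is to list which processes currently hold the NPU’s device node open. This sketch scans the `/proc/<pid>/fd` symlinks, so it shows which programs are using the NPU, not how busy it is. The default path `/dev/accel/accel0` is an assumption, processes owned by other users are only visible when run as root, and the function name `device_users` is hypothetical.

```shell
#!/bin/sh
# Crude sketch: list the PIDs of processes that hold a device node open,
# by scanning /proc/<pid>/fd symlinks. Shows WHO has the NPU open, not
# utilization. The default /dev/accel/accel0 path is an assumption.

# device_users PATH: print each PID that has PATH open, one per line
device_users() (
    target=$1
    for proc in /proc/[0-9]*; do
        for fd in "$proc"/fd/*; do
            # readlink fails quietly on unreadable or vanished entries
            if [ "$(readlink "$fd" 2>/dev/null)" = "$target" ]; then
                echo "${proc#/proc/}"
                break   # one match per process is enough
            fi
        done
    done
)

device_users "${1:-/dev/accel/accel0}"
```

Run it with sudo to see every user’s processes, or pass a different device path as the first argument.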

Complete list of articles in this series:

| Minisforum AI X1 Pro | |
|---|---|
| Introduction | Introduction to the series and interrogation of the machine |
| Benchmarks | Benchmarking the Minisforum AI X1 Pro |
| Power | Testing and comparing the power consumption |
| Jan | ChatGPT without privacy concerns |
| ComfyUI | Generate video, images, 3D, audio with AI |
| AMD Ryzen AI 9 HX 370 Cores | Primary (Zen 5) and Secondary Cores (Zen 5c) |
| Gerbil | Run large language models locally |
| Neural Processing Unit (NPU) | Introduction |
| Gaia | Run LLM Agents |
| Noise | Comparing the machine's noise with other mini PCs |
| Bluetooth | Fixing Bluetooth when dual-booting |
| BIOS | A tour of the Basic Input/Output System |

Thanks. I just found out that ONNX Runtime (onnxruntime.ai) can run on the NPU. I’m excited for the day when Ollama and llama.cpp will be able to use the NPU.

Do you have the AMD Ryzen AI 9 HX 370?

Hi – I have a Minisforum AI X1 Pro as well. Glad to see somebody else is looking for ways to make the NPU work on Linux. As I use EndeavourOS, I cannot run AMD Gaia yet, which is supposed to utilise the NPU. I would be curious to see what it’s like.

I’m writing up an article on Gaia; hopefully it’ll be ready tomorrow or the next day.

Thanks for this elaborate article.

I have a 370, and until now I couldn’t even tell whether there’s an NPU in my APU. Well, not that I tried many things, though. Thanks to you, I’m interested in making use of the NPU again.

P.S.

I’m on CachyOS and have enough space (2 NVMe and 1 sdcard) for any dual boot system.

Hi Zaky, like you I have 2 NVMes in my 370 and might add a third, although only the first two get the best transfer rates.

I’ve also booted the machine from external NVMe drives, as it has USB 3.2 Gen 2×1 ports that give good transfer rates. I don’t have any external USB4 enclosures, which would be much quicker, though.

I’m still learning, but I plan to build XRT for the Ryzen AI 340 NPU. It looks like xbtop, which is included, may be what you’re looking for. After reading the docs, it seems NPUs report resource usage a little differently. Xilinx XRT provides the APIs (FPGA and AIE) for low-level development. I’m learning lots of new acronyms. 😀

Hope this helps

[Moderation – removed hyperlinks as per the Comment FAQ]

Let us know how you get on! I’ll take a look at xbtop too.